Automate Election Night Returns Scraping With AWS Lambda

Posted on Sun 26 June 2022 in Election Modeling

To build our ‘Election Margin Projection Model’, we need to capture election night vote counts. Unfortunately, most archives of election results only record final vote tallies, while we need the vote count as a time series throughout the tally on election night (or election weeks, as has been the custom lately). To capture this time series, we deploy a serverless AWS stack that automates election night ballot return captures from two reporting sources: Decision Desk HQ (DDHQ) and the Associated Press (AP).

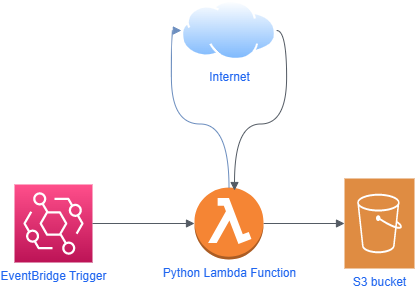

Architecture

The Python code that captures election night data is hosted in an AWS Lambda function. An EventBridge trigger executes the code every minute, and each election capture is saved to an S3 bucket.

Working backwards through the architecture above, starting with S3 storage, we create the resources needed for the scraping operation.

Storage

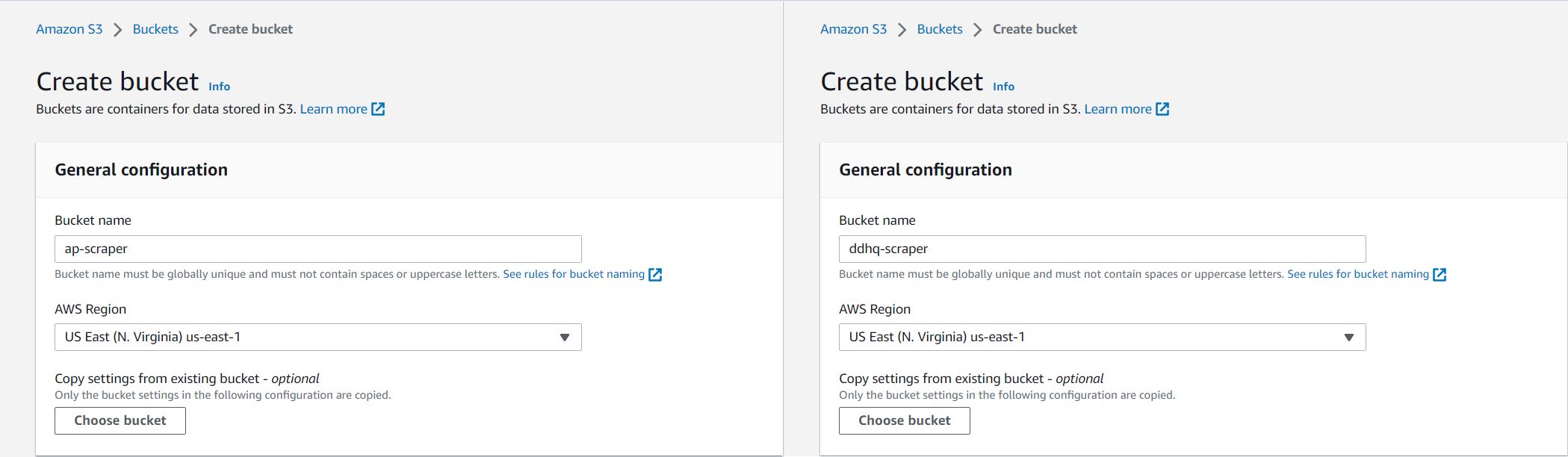

Two S3 buckets are created, one per reporting source. S3 bucket names must be globally unique, so modify the names if you're replicating this process:

- ddhq-scraper
- ap-scraper

We create both buckets in the same region and keep the remaining default settings.
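The same buckets can also be created from code instead of the console. The sketch below assumes a boto3 S3 client is passed in; create_scraper_buckets is a hypothetical helper, and the region is whatever you choose. The one non-obvious rule it encodes is that us-east-1 must not be sent as a LocationConstraint, while every other region must be.

```python
def create_scraper_buckets(s3_client, bucket_names, region):
    """Create each bucket in `region` and return the names requested.

    `s3_client` is assumed to be a boto3 S3 client, e.g.
    boto3.client("s3", region_name=region).
    """
    created = []
    for name in bucket_names:
        kwargs = {"Bucket": name}
        # us-east-1 is the default location and is rejected as a LocationConstraint;
        # every other region has to be named explicitly.
        if region != "us-east-1":
            kwargs["CreateBucketConfiguration"] = {"LocationConstraint": region}
        s3_client.create_bucket(**kwargs)
        created.append(name)
    return created
```

With real credentials this would be called as create_scraper_buckets(boto3.client("s3", region_name="us-west-2"), ["ddhq-scraper", "ap-scraper"], "us-west-2"), where "us-west-2" is only an example region.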

Below is the folder structure we'll use to store our election captures:

```
└── Year/
    └── State/
        └── Office/
            ├── Primary/
            │   └── Party
            ├── General
            └── Runoff
```

The folder path serves as the index of the election being captured. For example,

ddhq-scraper/2022/GA/ussenate/general/

is an election capture of the 2022 Senate general election in the state of Georgia, and each file in this folder is a tally snapshot during election night.
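As a sketch of how the folder tree maps to an S3 prefix, the hypothetical helper below (election_prefix is not part of the original code) builds Year/State/Office/race type, plus a party folder for primaries. Lowercasing the office, race type and party is an assumption chosen to match the example path above; the AP handler later in this post derives its path from AP's metadata fields instead.

```python
def election_prefix(year, state, office, race_type, party=None):
    """Build the S3 folder path <Year>/<State>/<Office>/<race type>[/<Party>]/.

    race_type must be "primary", "general" or "runoff"; party is only used
    (and required) for primaries, mirroring the folder tree above.
    """
    race_type = race_type.lower()
    if race_type not in {"primary", "general", "runoff"}:
        raise ValueError(f"unexpected race type: {race_type!r}")
    parts = [str(year), state.upper(), office.lower(), race_type]
    if race_type == "primary":
        if not party:
            raise ValueError("primary captures are stored per party")
        parts.append(party.lower())
    return "/".join(parts) + "/"
```

For instance, election_prefix(2022, "GA", "ussenate", "general") yields "2022/GA/ussenate/general/", the prefix of the Georgia example inside the ddhq-scraper bucket.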

Lambda Code

Regardless of the reporting source or the particular election event, parsing and storing each capture follows the same template:

```
set 'elections' <- request elections data from reporting source url
for each 'election' in 'elections' do
    set 'election path' <- get S3 path from 'election'
    if ETag of 'election' not in (all ETags under 'election path') then
        save 'election' to 'election path' with file name <Epoch Time>_<ETag Hash>.json
```

Implementations of the above pseudocode can be found here, but we'll go through the common code below.
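The template above can be written as a small, source-agnostic Python function. This is a sketch, not one of the linked implementations: capture_elections and its parameters (path_for, existing_etags, save, now) are hypothetical names, and fetching plus the actual S3 calls are delegated to the functions passed in, so the loop can be exercised without AWS.

```python
import hashlib
import json
import time


def capture_elections(elections, path_for, existing_etags, save, now=time.time):
    """Store each election whose content hash is new for its S3 path.

    elections:      iterable of JSON-serialisable election dicts (already fetched)
    path_for:       function mapping an election to a prefix like "2022/GA/ussenate/general/"
    existing_etags: function mapping a prefix to the ETags already stored under it
    save:           function taking (key, body_bytes), e.g. a wrapper around put_object
    Returns the list of keys that were written.
    """
    written = []
    for election in elections:
        body = json.dumps(election).encode("utf-8")
        # Quoted MD5 hex digest, the same format S3 reports for single-PUT objects.
        etag = '"{}"'.format(hashlib.md5(body).hexdigest())
        prefix = path_for(election)
        if etag not in existing_etags(prefix):
            key = f"{prefix}{int(now())}_{etag[1:-1]}.json"
            save(key, body)
            written.append(key)
    return written
```

Because identical content produces an identical hash, running this every minute only writes a new file when the reported results actually change.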

Because the capture function runs on a schedule every minute, whether or not the election results have changed, each capture's file name should carry two properties for easy indexing:

- A timestamp, to sort the captures into a time series.

- A hash of the file contents, to tell whether the results have changed since the last run.

Both properties could be derived from each object's S3 metadata (its LastModified timestamp and ETag), but putting them in the file name keeps things simple. Each file name has the format:

<Epoch Time>_<ETag Hash>.json
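Since both values live in the key itself, a race's captures can be put back into time order without downloading them. The sketch below uses hypothetical helper names (parse_capture_key, time_series_order) and an illustrative key; it only assumes the <Epoch Time>_<ETag Hash>.json format above.

```python
import posixpath


def parse_capture_key(key):
    """Split '2022/GA/ussenate/general/<epoch>_<md5>.json' into (prefix, epoch, etag_hex)."""
    prefix, filename = posixpath.split(key)
    stem, ext = posixpath.splitext(filename)
    if ext != ".json":
        raise ValueError(f"not a capture file: {key!r}")
    epoch, etag = stem.split("_", 1)
    return (prefix + "/" if prefix else ""), int(epoch), etag


def time_series_order(keys):
    """Return capture keys sorted chronologically by their epoch timestamp."""
    return sorted(keys, key=lambda k: parse_capture_key(k)[1])
```

Sorting on the parsed integer rather than the raw string avoids relying on every epoch having the same number of digits.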

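The epoch time and ETag hash are calculated with the functions below:

```python
import hashlib
import time


def calculate_s3_etag(data):
    """MD5 hex digest of the bytes in `data`, wrapped in double quotes like an S3 ETag."""
    md5s = hashlib.md5(data)
    return '"{}"'.format(md5s.hexdigest())


def curr_epoch():
    """Current Unix epoch time in whole seconds."""
    # time.time() is already seconds since the Unix epoch, independent of the local timezone.
    return int(time.time())
```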

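After parsing and formatting the election data, we calculate its ETag with the MD5 function above and compare it to the ETags of the captures already stored in S3. This comparison works because, for an object uploaded in a single PUT without SSE-KMS or SSE-C encryption, the ETag S3 reports is the hex MD5 digest of the object's bytes wrapped in double quotes; the quotes are stripped (etag[1:-1]) before the hash goes into a file name.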

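```python
import boto3


def get_s3_etag(bucket, prefix):
    """Return the ETags of the objects stored under `prefix` in `bucket`."""
    s3 = boto3.client('s3')
    resp = s3.list_objects_v2(Bucket=bucket, Prefix=prefix)
    # 'Contents' is absent when nothing has been stored under the prefix yet.
    return [obj['ETag'] for obj in resp.get('Contents', [])]


# Inside the handler, for each race (s3 there is boto3.resource("s3")):
# skip the upload if identical content has already been captured.
etags = get_s3_etag(bucket_name, prefix)
if etag not in etags:
    # etag[1:-1] strips the surrounding double quotes.
    s3_path = prefix + str(curr_epoch()) + "_" + etag[1:-1] + '.json'
    s3.Bucket(bucket_name).put_object(Key=s3_path, Body=election_body)
```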
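One caveat with a single list_objects_v2 call is that it returns at most 1,000 keys, so a race whose results change often over an election week could eventually hide older ETags. The sketch below swaps in boto3's list_objects_v2 paginator and folds the comparison and upload into one hypothetical helper (store_if_new); s3_client is assumed to be boto3.client("s3").

```python
import hashlib


def list_etags(s3_client, bucket, prefix):
    """Return the set of ETags of every object under `prefix`, across all result pages."""
    etags = set()
    paginator = s3_client.get_paginator("list_objects_v2")
    for page in paginator.paginate(Bucket=bucket, Prefix=prefix):
        # A page for an empty prefix has no "Contents" key at all.
        for obj in page.get("Contents", []):
            etags.add(obj["ETag"])
    return etags


def store_if_new(s3_client, bucket, prefix, body, epoch):
    """Upload `body` as <prefix><epoch>_<md5>.json unless identical content is already stored.

    Returns the new key, or None when the capture was a duplicate.
    """
    etag = '"{}"'.format(hashlib.md5(body).hexdigest())
    if etag in list_etags(s3_client, bucket, prefix):
        return None
    key = f"{prefix}{epoch}_{etag[1:-1]}.json"
    s3_client.put_object(Bucket=bucket, Key=key, Body=body)
    return key
```

Using a set makes each membership check constant-time, which matters little at this scale but costs nothing.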

Below is the full code for the election night capture of AP data on November 8th, 2022. The rest of the examples reference this AP capture.

```python
import hashlib
import json
import time
from urllib.request import urlopen, Request

import boto3

BASE_URL = "https://interactives.ap.org/election-results/data-live/2022-11-08/results"
# Browser-like User-Agent, sent with every AP request.
HEADERS = {'User-Agent': 'Mozilla/5.0'}

s3 = boto3.resource("s3")
s3_client = boto3.client("s3")


def fetch_json(url):
    """GET `url` and decode its JSON body."""
    return json.loads(urlopen(Request(url, headers=HEADERS)).read())


def lambda_handler(event, context):
    bucket_name = "ap-scraper"

    # progress.json lists every race being tracked nationally.
    js = fetch_json(f"{BASE_URL}/national/progress.json")

    for race in js.values():
        race_url = f"{BASE_URL}/races/{race['statePostal']}/{race['raceID']}"
        election = fetch_json(f"{race_url}/detail.json")
        election['metadata'] = fetch_json(f"{race_url}/metadata.json")

        election_body = json.dumps(election).encode('utf-8')
        etag = calculate_s3_etag(election_body)

        # Build the S3 prefix <year>/<state>/<office>/<race type>/ from the race metadata.
        meta = election['metadata']
        race_type = meta['raceType'].lower().replace(' ', '_')
        prefix = '2022/' + meta['statePostal'] + '/'
        if meta['officeID'] == 'H':
            prefix += 'ushouse' + str(meta['seatNum']) + '/' + race_type + '/'
        elif meta['officeID'] == 'S':
            prefix += 'ussenate/' + race_type + '/'
        elif meta['officeID'] == 'G':
            prefix += 'governor/' + race_type + '/'
        else:
            # Other offices: race type, then the seat name reduced to letters, digits and spaces.
            seat_name = ''.join(c for c in meta['seatName'] if c.isalnum() or c == ' ')
            prefix += race_type + '/' + seat_name + '/'
        prefix = prefix.replace(' ', '_')

        # Only store the capture if its content differs from every capture already saved.
        etags = get_s3_etag(bucket_name, prefix)
        if etag not in etags:
            s3_path = prefix + str(curr_epoch()) + "_" + etag[1:-1] + '.json'
            s3.Bucket(bucket_name).put_object(Key=s3_path, Body=election_body)


def get_s3_etag(bucket, prefix):
    """Return the ETags of the objects under `prefix` (first 1,000 keys only)."""
    resp = s3_client.list_objects_v2(Bucket=bucket, Prefix=prefix)
    return [obj['ETag'] for obj in resp.get('Contents', [])]


def curr_epoch():
    """Current Unix epoch time in whole seconds."""
    return int(time.time())


def calculate_s3_etag(data):
    """Quoted MD5 hex digest of `data`, matching S3's ETag for single-PUT uploads."""
    return '"{}"'.format(hashlib.md5(data).hexdigest())
```

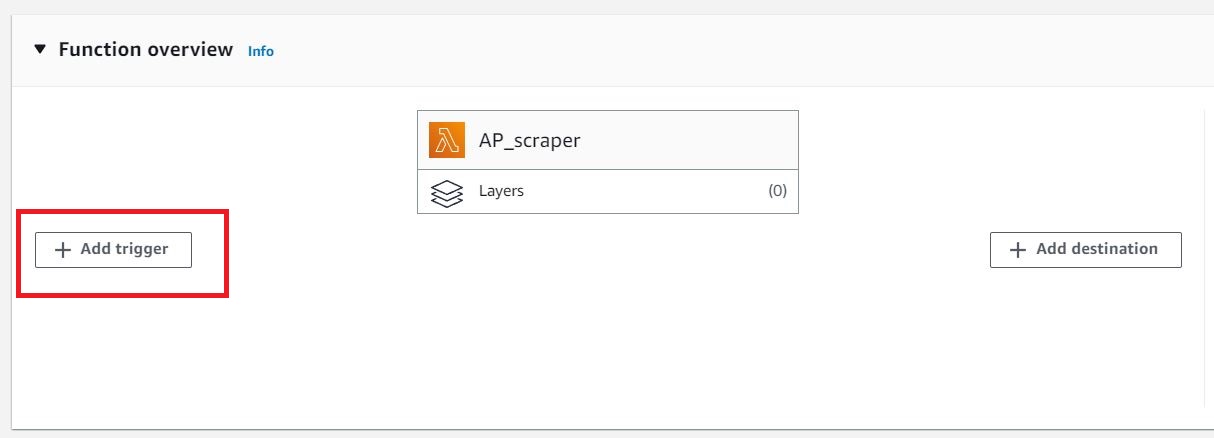

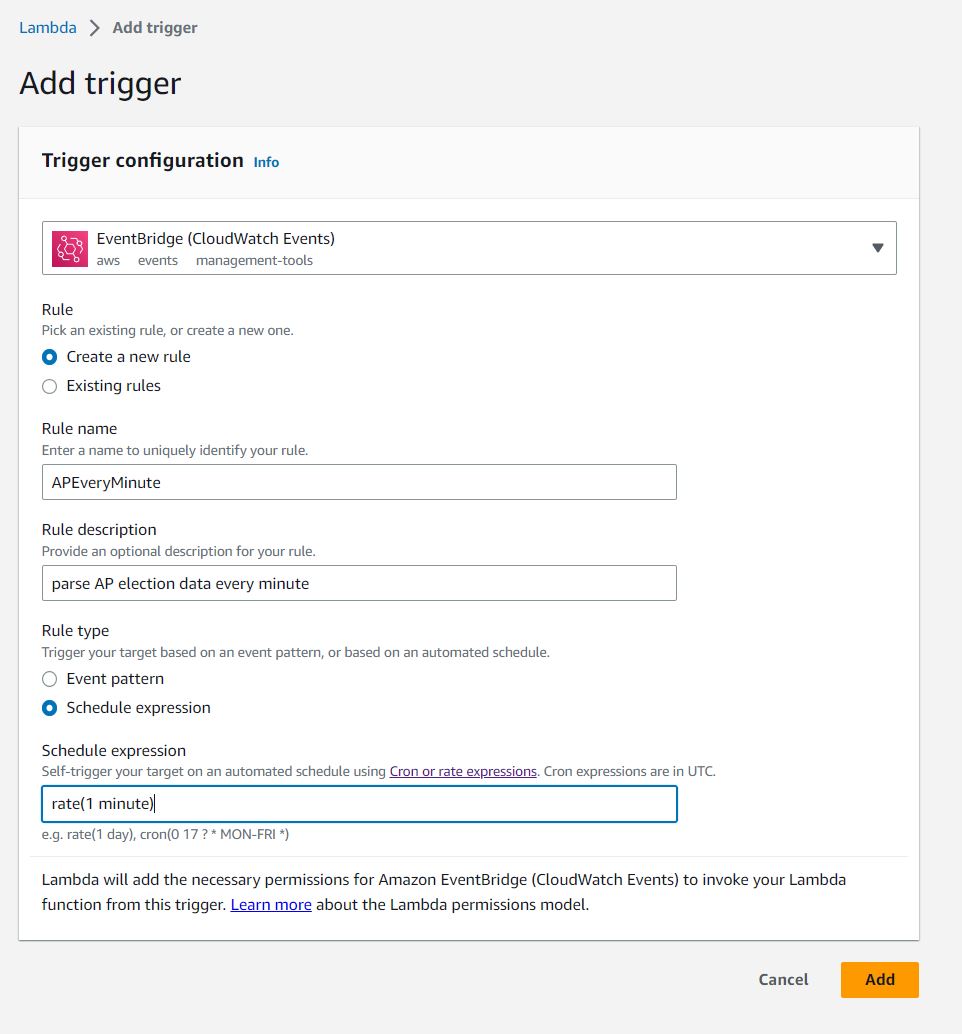

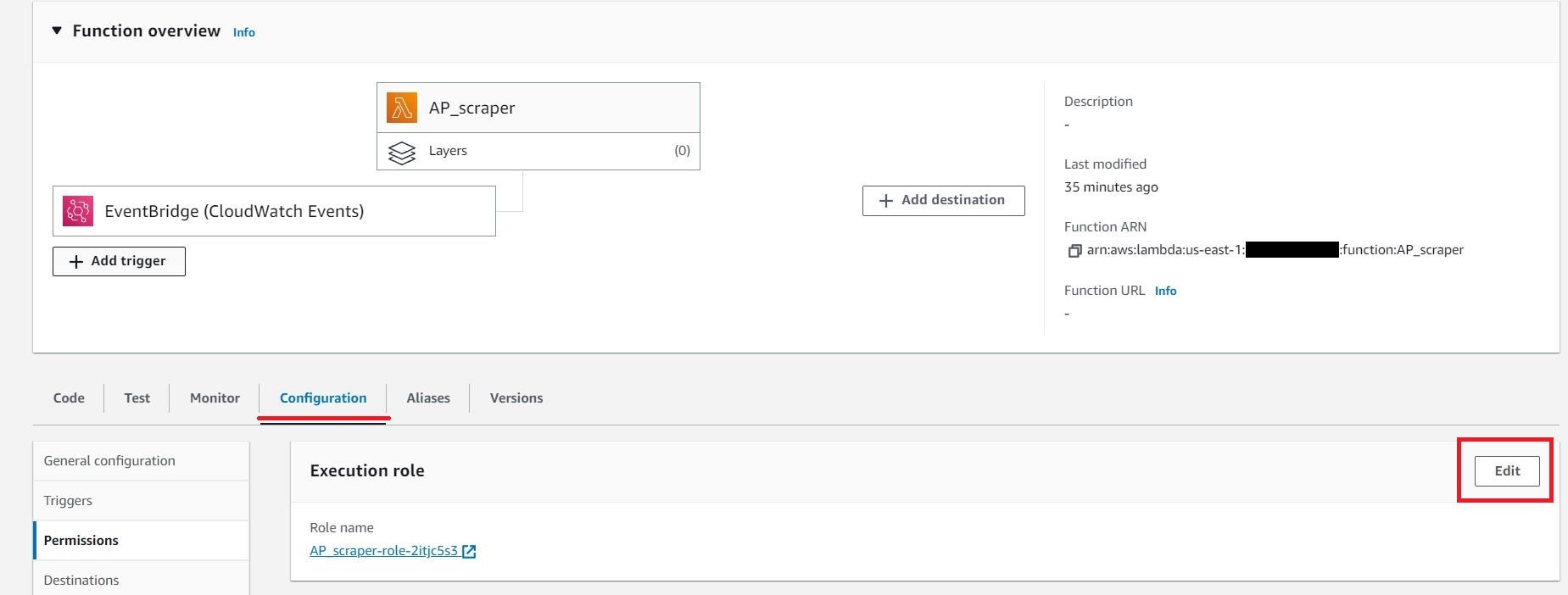

EventBridge Trigger

In the Lambda console, we add a trigger to our function.

We choose EventBridge as the trigger source and create a new rule with the schedule expression rate(1 minute), so the function runs every minute.
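For readers who prefer code to console clicks, the sketch below performs roughly the same setup with three real API calls: EventBridge put_rule and put_targets, and Lambda add_permission. schedule_every_minute, the rule name and the statement id are hypothetical; events_client and lambda_client are assumed to be boto3.client("events") and boto3.client("lambda").

```python
def schedule_every_minute(events_client, lambda_client, rule_name, function_name, function_arn):
    """Create an EventBridge rule that invokes a Lambda function every minute.

    Returns the rule ARN.
    """
    # put_rule creates the rule, or updates it if a rule with this name already exists.
    rule_arn = events_client.put_rule(
        Name=rule_name,
        ScheduleExpression="rate(1 minute)",  # the unit must be singular when the value is 1
        State="ENABLED",
    )["RuleArn"]
    # Let EventBridge invoke the function, scoped to this rule only.
    lambda_client.add_permission(
        FunctionName=function_name,
        StatementId=f"{rule_name}-invoke",
        Action="lambda:InvokeFunction",
        Principal="events.amazonaws.com",
        SourceArn=rule_arn,
    )
    # Point the rule at the function.
    events_client.put_targets(Rule=rule_name, Targets=[{"Id": "1", "Arn": function_arn}])
    return rule_arn
```

Re-running this as written would fail at add_permission, because a statement with the same id already exists on the function; a real script would catch that conflict or remove the old statement first.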

Adding the trigger through the Lambda console associates the EventBridge rule with our function and sets up the permissions between the two, but we still need to grant our Lambda function access to the S3 bucket.

Permissions

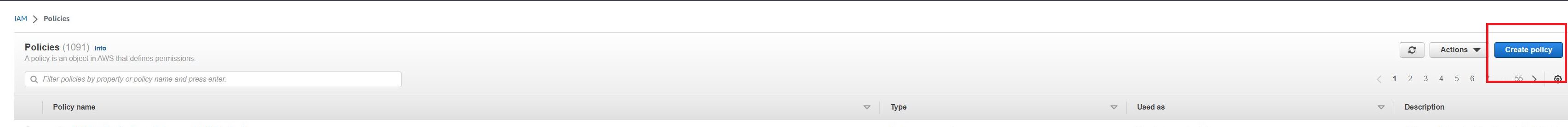

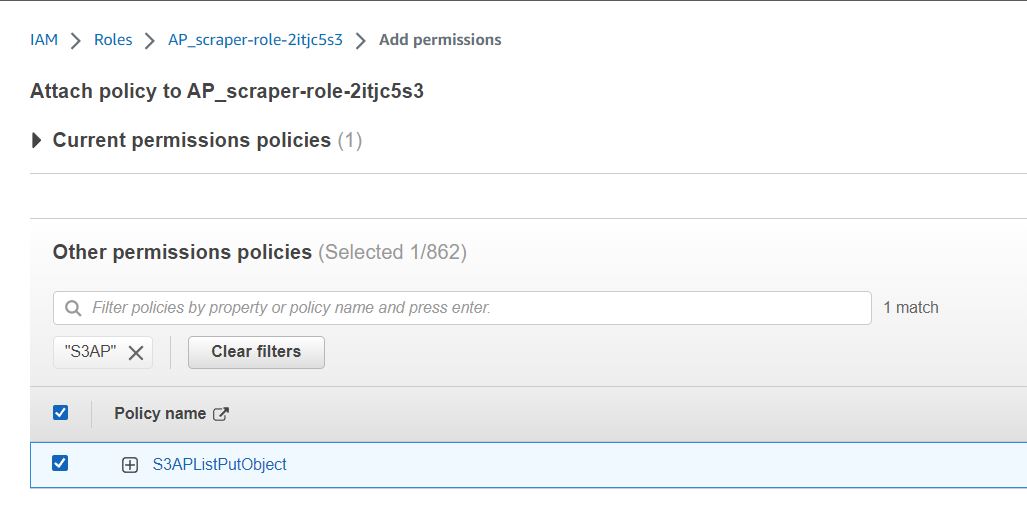

Finally, to tie up loose ends, we attach a policy to our Lambda function's execution role so it can access the S3 bucket. First, in the IAM console, we create a new policy granting access to our bucket and name it S3APListPutObject.

Our Lambda function needs two permissions on the bucket:

- s3:ListBucket, to read the ETags of existing captures; it applies to the bucket itself (arn:aws:s3:::ap-scraper)
- s3:PutObject, to write new captures; it applies to the objects in the bucket (arn:aws:s3:::ap-scraper/*)

Below is a sample policy document for our 'ap-scraper' bucket:

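```json
{
    "Version": "2012-10-17",
    "Statement": [
        {
            "Sid": "ListCaptures",
            "Effect": "Allow",
            "Action": "s3:ListBucket",
            "Resource": "arn:aws:s3:::ap-scraper"
        },
        {
            "Sid": "WriteCaptures",
            "Effect": "Allow",
            "Action": "s3:PutObject",
            "Resource": "arn:aws:s3:::ap-scraper/*"
        }
    ]
}
```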

Next, we edit the Lambda function's execution role and attach the new S3APListPutObject policy to it.
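The policy step can also be scripted, which helps when repeating it for the ddhq-scraper bucket. The sketch assumes iam_client is boto3.client("iam"); scraper_policy and attach_scraper_policy are hypothetical helpers, and the role name you pass must be your function's actual execution role.

```python
import json


def scraper_policy(bucket):
    """Policy document: ListBucket on the bucket ARN, PutObject on its object ARNs."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {"Effect": "Allow", "Action": "s3:ListBucket", "Resource": f"arn:aws:s3:::{bucket}"},
            {"Effect": "Allow", "Action": "s3:PutObject", "Resource": f"arn:aws:s3:::{bucket}/*"},
        ],
    }


def attach_scraper_policy(iam_client, role_name, policy_name, bucket):
    """Create a managed policy for `bucket`, attach it to `role_name`, and return its ARN."""
    policy_arn = iam_client.create_policy(
        PolicyName=policy_name,
        PolicyDocument=json.dumps(scraper_policy(bucket)),
    )["Policy"]["Arn"]
    iam_client.attach_role_policy(RoleName=role_name, PolicyArn=policy_arn)
    return policy_arn
```

Generating the document from the bucket name keeps the bucket ARN and the /* object ARN in sync, which is the detail most easily gotten wrong by hand.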

Conclusion

We've demonstrated a quick and dirty method of capturing election night tally progression. This method stores the raw election captures in S3; in future work, we'll investigate building our ‘Election Margin Projection Model’ on the captured data to predict election night margins.